The Essential AI Terms You Need to Know

As AI continues to transform industries and everyday life, understanding the language of this rapidly evolving field is essential. Whether you’re a student, a professional, or simply curious about AI, this resource will help you navigate key artificial intelligence terms and concepts. From foundational terms like “machine learning” and “neural networks” to specialized concepts such as “transfer learning” and “algorithmic bias,” our lexicon provides clear definitions and insights. Dive in to enhance your knowledge and stay informed about the exciting world of artificial intelligence!

Machine Learning

A branch of artificial intelligence in which computer systems learn from data. Instead of being explicitly programmed for each specific task, these systems improve their performance as they analyze more examples. Machine learning is used in many areas, such as speech recognition, image classification, and recommendation systems. It allows computers to recognize patterns and make decisions without being given explicit rules for every case.

Algorithm

A set of step-by-step instructions that a computer follows to solve a problem or complete a task. Algorithms process data and perform calculations to reach a desired outcome. They are the building blocks of computer programs and help make decisions based on given inputs.

Bias

Systematic errors occurring when a model makes incorrect assumptions about data. These can lead to unfair or inaccurate predictions, especially if the training data does not represent the real world. AI bias can affect the outcomes of machine learning models and influence how they behave in different situations.

Cross-Validation

A technique used to estimate how well a model will perform on unseen data. It involves dividing the data into several subsets (folds), training the model on some of them, and testing it on the remaining ones. In the common k-fold variant, this is repeated so that each fold serves once as the test set. Averaging the results gives a more reliable performance estimate than a single train/test split and helps detect overfitting to the training data.
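The k-fold procedure can be sketched in plain Python as follows. This is a minimal illustration, not a library API: `fit` and `score` are hypothetical callables you would supply, the folds are taken in order without shuffling, and the toy “model” simply predicts the mean of its training targets.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    indices = list(range(n_samples))
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, and average the k scores."""
    scores = []
    for train, test in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(score(model, [xs[i] for i in test], [ys[i] for i in test]))
    return sum(scores) / len(scores)


# Hypothetical toy model: always predict the mean training target.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def mean_absolute_error(model, xs, ys):
    return sum(abs(y - model) for y in ys) / len(ys)


print(cross_validate([0, 0, 0, 0], [2, 4, 6, 8], k=2,
                     fit=fit_mean, score=mean_absolute_error))  # 4.0
```

In practice the data is usually shuffled before splitting, and for classification the folds are often stratified so that each one keeps the original class proportions.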

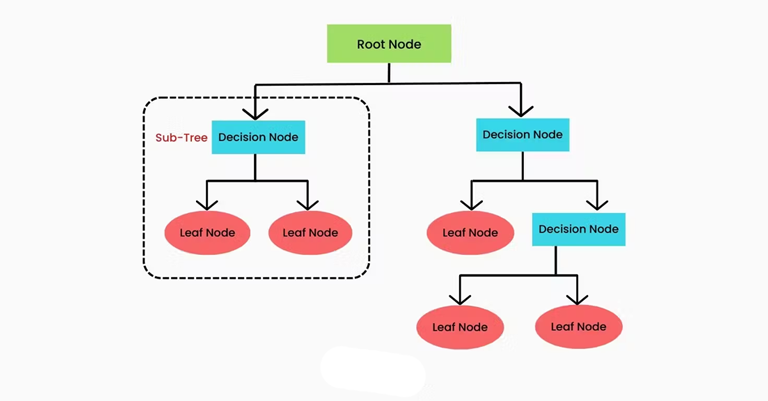

Decision Tree

A model that makes decisions by asking a series of questions about the input. It resembles a tree structure: each internal node tests a feature (for example, “Is age over 30?”), each branch represents an answer to that test, and each leaf holds a final prediction. Decision trees make the decision-making process easy to visualize and can handle both numerical and categorical data, making them useful for classification and regression tasks.
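The core step in growing a decision tree is choosing which question to ask at a node. The sketch below, a minimal illustration rather than any particular library’s algorithm, learns a one-question tree (a “decision stump”) on a single numeric feature by picking the threshold that minimizes Gini impurity, one common splitting criterion. The function names and the study-hours data are hypothetical; a full tree would repeat the same search recursively on each side of the split.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0 when all labels agree, larger when they are mixed."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(xs, labels):
    """Return (threshold, weighted_gini) for the best 'x <= threshold?' question."""
    best_threshold, best_score = None, float("inf")
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, labels) if x <= t]
        right = [y for x, y in zip(xs, labels) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = t, score
    return best_threshold, best_score


def fit_stump(xs, labels):
    """Build a depth-one tree: one question, with the majority label on each side."""
    t, _ = best_split(xs, labels)
    left = [y for x, y in zip(xs, labels) if x <= t]
    right = [y for x, y in zip(xs, labels) if x > t] or left
    left_label = Counter(left).most_common(1)[0][0]
    right_label = Counter(right).most_common(1)[0][0]
    return lambda x: left_label if x <= t else right_label


hours_studied = [1, 2, 3, 10, 11, 12]
outcome = ["fail", "fail", "fail", "pass", "pass", "pass"]
stump = fit_stump(hours_studied, outcome)
print(best_split(hours_studied, outcome))  # (3, 0.0)
print(stump(0), stump(20))                 # fail pass
```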

Ensemble Learning

A method that combines multiple models to improve overall performance. Instead of relying on a single model, ensemble learning aggregates the predictions of several models, which may use the same algorithm (as in bagging) or different ones. By combining their results, for example through voting or averaging, it can reduce errors and improve accuracy, often producing better results than any individual model alone.
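Majority voting is one simple way to aggregate predictions. The sketch below assumes each model is just a Python callable that returns a class label; the three threshold “models” are hypothetical. The second function shows why ensembles can help under a strong and often unrealistic assumption, namely that the models make independent errors: three classifiers that are each right 70% of the time then give a majority vote that is right about 78.4% of the time.

```python
from collections import Counter
from math import comb


def majority_vote(models, x):
    """Return the label predicted by the most models (ties go to the first seen)."""
    votes = [model(x) for model in models]
    return Counter(votes).most_common(1)[0][0]


def majority_accuracy(p, n):
    """Chance that a majority of n independent classifiers, each correct with
    probability p, is correct. Assumes n is odd so there are no ties."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


# Hypothetical weak models: each calls a number "big" above its own threshold.
models = [lambda x: x > 0, lambda x: x > 5, lambda x: x > -5]
print(majority_vote(models, 3))             # True (2 of 3 vote True)
print(round(majority_accuracy(0.7, 3), 3))  # 0.784
```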

Feature Selection

The process of choosing the most important variables from a dataset to use in a model. It helps improve the model’s performance by removing irrelevant or redundant information. By focusing on key features, this technique reduces complexity and can improve the model’s accuracy.
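One of the simplest filter-style feature selection methods drops features that barely vary, since a near-constant column carries little information. The sketch below is a minimal illustration with a hypothetical threshold and toy data; practical pipelines usually also consider how each feature relates to the target.

```python
from statistics import pvariance


def variance_threshold(rows, threshold=0.0):
    """Return the indices of columns whose variance is above the threshold."""
    columns = list(zip(*rows))
    return [j for j, column in enumerate(columns) if pvariance(column) > threshold]


def select_columns(rows, keep):
    """Keep only the chosen column indices in each row."""
    return [[row[j] for j in keep] for row in rows]


rows = [
    [1, 0, 3],
    [1, 1, 5],
    [1, 0, 7],
]
keep = variance_threshold(rows)    # column 0 is constant, so it is dropped
print(keep)                        # [1, 2]
print(select_columns(rows, keep))  # [[0, 3], [1, 5], [0, 7]]
```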

Model Evaluation

The process of assessing how well a machine learning model performs. It involves using metrics and techniques to measure accuracy, precision, recall, and other factors. Effective evaluation helps determine if a model is suitable for making predictions on new data.
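The most common classification metrics can be computed directly from counts of true positives, false positives, and false negatives. The sketch below is a plain-Python illustration for a binary task; the function name and the convention of returning 0.0 when a ratio is undefined are assumptions, not a standard API.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly flagged
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # missed positives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
print(classification_metrics(y_true, y_pred))
```

Precision answers “of the items flagged positive, how many really were?”, while recall answers “of the real positives, how many were found?”. Accuracy alone can be misleading when one class is much rarer than the other.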

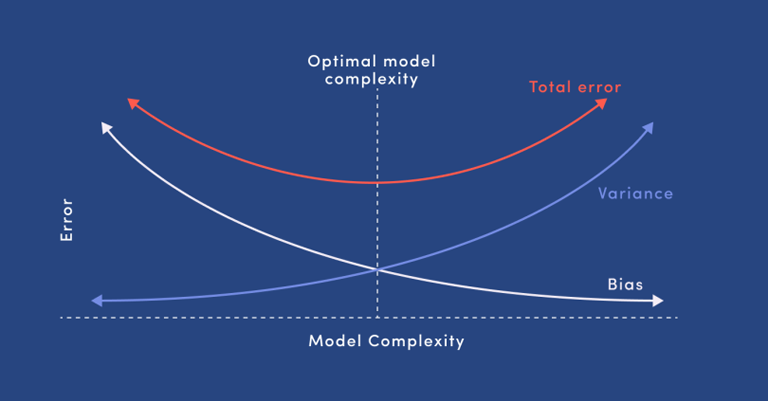

Overfitting

Occurs when a model learns the training data too closely, including its noise and outliers. As a result, it performs well on the training set but poorly on new, unseen data. Overfitting is most likely when a model is too complex relative to the amount of training data available, which makes it less effective in real-world applications.

Reinforcement Learning

A type of machine learning where an agent learns to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or penalties based on its actions. Over time, it learns to choose actions that maximize cumulative rewards, enabling it to improve its performance.
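Tabular Q-learning is one classic reinforcement learning algorithm, and a small version shows the reward-driven loop described above. In this sketch the environment is a hypothetical four-cell corridor where the agent starts at cell 0 and earns a reward of 1 only on reaching cell 3; the learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative values, not recommendations.

```python
import random

ACTIONS = ("left", "right")
GOAL = 3  # hypothetical corridor with cells 0, 1, 2, 3


def step(state, action):
    """Move one cell; the episode ends with reward 1 when the agent reaches GOAL."""
    if action == "right":
        next_state = min(state + 1, GOAL)
    else:
        next_state = max(state - 1, 0)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # Q-table: estimated long-term reward for each (state, action) pair.
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward + discounted value of the next state.
            target = reward
            if not done:
                target += gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)
```

After training, the greedy policy should move right from every cell: with `gamma` below 1, reaching the reward sooner is worth more than wandering left.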

Supervised Learning

A type of machine learning where a model is trained on labeled data. In this approach, the model learns to make predictions based on input-output pairs. The goal is to map inputs to the correct outputs, allowing the model to generalize and make accurate predictions on new data.

Training Data

A set of examples used to teach a machine learning model. In supervised learning, it consists of input-output pairs that help the model learn patterns and make predictions. The quality and quantity of training data directly affect the model’s performance and accuracy.

Unsupervised Learning

A type of machine learning where a model learns from data without labeled outputs. In this approach, the model tries to find patterns, groupings, or structures within the data on its own. It is useful for tasks like clustering and anomaly detection.

Underfitting

Occurs when a model is too simple to capture the underlying patterns in the data. As a result, it performs poorly on both the training set and new data. Underfitting happens when the model lacks complexity or does not use enough relevant features.

Variance

A model’s sensitivity to small changes in the training data. High variance means the model pays too much attention to noise and outliers, leading to overfitting. A model with low variance is more stable and generalizes better to new, unseen data.

Natural Language Processing (NLP)

A field of artificial intelligence that focuses on the interaction between computers and human language. It enables machines to understand, interpret, and generate text or speech in a way that is meaningful to people. Natural Language Processing combines linguistics and computer science to analyze language patterns, allowing applications like chatbots, translation services, and sentiment analysis. By processing large amounts of text data, NLP helps computers communicate more effectively with humans and improve user experiences.

Attention Mechanism

A technique used in machine learning that allows models to focus on the most relevant parts of the input when making predictions. By assigning different weights to different elements, such as the words in a sentence, it helps the model capture context and judge how relevant each element is to the current output. This approach improves performance in tasks like translation and text summarization.
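One widely used form is the scaled dot-product attention of the Transformer architecture: each output is a weighted average of value vectors, with weights given by a softmax over query-key similarity scores, softmax(Q·Kᵀ / √d_k)·V. The plain-Python sketch below assumes small lists of equal-length vectors and hypothetical toy inputs; real implementations use batched matrix operations.

```python
import math


def softmax(scores):
    """Turn scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of the value vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
queries = [[5.0, 0.0]]  # much more similar to the first key than the second
print(scaled_dot_product_attention(queries, keys, values))  # mostly the first value vector
```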

BERT (Bidirectional Encoder Representations from Transformers)

A powerful language model designed to understand the context of words in a sentence. It processes text in both directions, looking at words before and after a target word. BERT helps improve tasks like question answering and sentiment analysis by capturing deeper meanings in language.

Chatbot

A computer program that simulates conversation with users through text or voice. Chatbots use natural language processing to understand questions and provide relevant responses. They are commonly used in customer service, information retrieval, and personal assistance to enhance user interaction and support.

Lemmatization

The process of reducing words to their base dictionary form, known as the lemma. Unlike stemming, which simply removes endings, lemmatization considers the word’s meaning and grammatical role. For example, “running” becomes “run,” and “better” (used as an adjective) becomes “good.” This technique helps improve the accuracy of natural language processing tasks.

Language Model

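A model that assigns probabilities to sequences of words. It helps computers understand and generate human language by estimating the probability of a word given its context, such as the words that come before it. Early language models were purely statistical, counting word sequences in a body of text, while modern ones are typically large neural networks. Language models are crucial for speech recognition, machine translation, and text generation.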
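A bigram model, one of the simplest statistical language models, estimates the probability of each word from the single word before it by counting word pairs in a corpus. The sketch below uses a hypothetical three-sentence corpus and no smoothing, so any unseen word pair gets probability zero; practical models smooth these counts or, in modern systems, replace counting with a neural network.

```python
from collections import Counter, defaultdict


def tokens_of(sentence):
    # <s> and </s> mark the start and end of a sentence.
    return ["<s>"] + sentence.split() + ["</s>"]


def train_bigram(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = tokens_of(sentence)
        for prev, curr in zip(tokens, tokens[1:]):
            counts[prev][curr] += 1
    return counts


def next_word_prob(counts, prev, word):
    """Estimate P(word | prev) as count(prev, word) / count(prev, anything)."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    return following[word] / total if total else 0.0


def sentence_prob(counts, sentence):
    """Multiply the bigram probabilities across the sentence."""
    tokens = tokens_of(sentence)
    prob = 1.0
    for prev, curr in zip(tokens, tokens[1:]):
        prob *= next_word_prob(counts, prev, curr)
    return prob


corpus = ["the cat sat", "the dog sat", "the cat ran"]
model = train_bigram(corpus)
print(next_word_prob(model, "the", "cat"))  # 2 of the 3 "the" tokens precede "cat"
print(sentence_prob(model, "the cat sat"))  # about 0.333
print(sentence_prob(model, "the dog ran"))  # 0.0, since "dog ran" never occurs
```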

Named Entity Recognition (NER)

A process in natural language processing that identifies and classifies key elements in text into predefined categories. These categories often include names of people, organizations, locations, dates, and more. NER helps systems understand important information within a text, making it useful for tasks like information extraction and content organization.

Part-of-Speech Tagging

Labeling words in a sentence with their corresponding parts of speech, such as nouns, verbs, adjectives, and adverbs. By identifying the grammatical roles of words, this technique helps computers understand sentence structure and meaning, aiding in tasks like translation and text analysis.

Semantic Analysis

Understanding the meaning of words, phrases, and sentences in context. It goes beyond the surface structure of language to capture relationships and meanings between concepts. Semantic analysis helps improve tasks such as question answering, summarization, and information retrieval by ensuring that the intended meaning is accurately represented.

Sentiment Analysis

The process of determining the emotional tone behind words in text. It helps identify whether a piece of writing expresses positive, negative, or neutral feelings. Businesses often use sentiment analysis to gauge customer opinions and reactions from social media, reviews, and surveys.
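A very simple approach to sentiment analysis is lexicon-based scoring: add up positive and negative word scores and report the sign of the total. The tiny word list below is invented for illustration; real systems use much larger lexicons or trained classifiers, and this sketch ignores negation, so “not good” would score as positive.

```python
import re

# Hypothetical mini-lexicon: word -> sentiment score.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}


def sentiment(text):
    """Label text positive, negative, or neutral from summed word scores."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(word, 0) for word in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the camera is great!"))  # positive
print(sentiment("Terrible battery life."))                   # negative
print(sentiment("It arrived on Tuesday."))                   # neutral
```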

Stemming

A technique used to reduce words to a common base form, usually by chopping off suffixes. For example, “running” and “runs” may both be reduced to “run.” Because stemming applies rules without understanding the word, it cannot map irregular forms such as “ran” to “run,” and it may produce non-dictionary words (the Porter stemmer, for instance, reduces “studies” to “studi”). Even so, it simplifies text processing and can help improve the performance of natural language processing tasks.
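To show the idea, here is a deliberately naive suffix-stripping stemmer. It is a hypothetical toy, not the Porter algorithm or any library’s stemmer: it tries a short list of suffixes in order, always keeps at least three letters, and undoes a doubled final consonant so that “running” becomes “run.” Like real stemmers, it leaves irregular forms such as “ran” untouched and can output non-words.

```python
# Suffixes are tried in this order; the first match wins.
SUFFIXES = ("ing", "ly", "ed", "es", "s")


def naive_stem(word):
    """Strip one common English suffix; a toy for illustration only."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo a doubled final consonant such as "runn" -> "run",
            # but keep endings like "ll" and "ss" ("falling" -> "fall").
            if stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word


for w in ["running", "runs", "stopped", "falling", "studies", "ran"]:
    print(w, "->", naive_stem(w))
```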

Text Classification

The process of categorizing text into predefined labels or categories based on its content. It involves training a model to recognize patterns in the text data, enabling applications like spam detection, topic labeling, and sentiment classification. By analyzing the features of the text, the model can make accurate predictions about its category.

Text Generation

The creation of new text based on certain input or context. Using models trained on large datasets, machines can produce coherent and contextually relevant sentences, paragraphs, or even entire articles. Applications include chatbots, story generation, and automatic content creation, allowing for creative and dynamic communication.

Tokenization

The process of breaking text into smaller units, called tokens, which can be words, subwords, phrases, or even individual characters. Tokenization prepares text for analysis by turning a raw string into manageable parts. It is a crucial first step in natural language processing, enabling further processing such as classification and translation.
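The sketch below contrasts two simple tokenization choices using Python’s standard `re` module: a word-level tokenizer that splits off punctuation, and a character-level one. Both are illustrative; modern language models typically use learned subword tokenizers (such as byte-pair encoding or WordPiece), which split rare words into smaller reusable pieces.

```python
import re


def word_tokenize(text):
    """Split into runs of word characters; each punctuation mark is its own token."""
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokenize(text):
    """Split into individual non-space characters."""
    return [ch for ch in text if not ch.isspace()]


print(word_tokenize("AI is fun!"))  # ['AI', 'is', 'fun', '!']
print(char_tokenize("AI is"))       # ['A', 'I', 'i', 's']
```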

Transformer

A model architecture used in natural language processing that relies on self-attention mechanisms. Transformers process all positions of the input in parallel rather than one step at a time as recurrent networks do, which makes training faster and more efficient. They have revolutionized NLP and form the basis of language models such as BERT and GPT.

Word Embeddings

A technique that represents words as numerical vectors in a continuous vector space. Word embeddings capture semantic meanings and relationships between words, allowing similar words to have closer vector representations. This technique enhances the performance of various NLP tasks, such as sentiment analysis and machine translation.
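Closeness between embeddings is commonly measured with cosine similarity, which compares the direction of two vectors regardless of their length. The three-dimensional vectors below are made up for illustration; real embeddings are learned from large text corpora and usually have hundreds of dimensions. With these toy values, “cat” comes out far closer to “dog” than to “car.”

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical 3-dimensional embeddings, invented for this example.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

for other in ("dog", "car"):
    print("cat vs", other, round(cosine_similarity(embeddings["cat"], embeddings[other]), 2))
```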

Computer Vision

A field of artificial intelligence that enables computers to interpret and understand visual information from the world. It involves the development of algorithms and models that allow machines to analyze images and videos, identifying objects, detecting patterns, and extracting meaningful information. Applications of computer vision include facial recognition, autonomous vehicles, medical image analysis, and augmented reality. By mimicking human visual perception, computer vision helps machines make sense of visual data and interact with their environment more effectively.

Convolutional Neural Network (CNN)

A type of deep learning model specifically designed for processing visual data. It uses layers of convolutional filters to automatically detect and learn features from images, such as edges, shapes, and textures. Convolutional Neural Networks are widely used in tasks like image classification, object detection, and image segmentation due to their ability to capture spatial hierarchies in data.

Depth Perception

The ability to perceive the distance and three-dimensional structure of objects in the environment. It enables humans and machines to understand spatial relationships and the relative positions of objects. In computer vision, depth perception is achieved using techniques like stereo vision, depth sensors, and image analysis, allowing systems to interact more effectively with their surroundings.

Facial Recognition

A technology that identifies and verifies individuals by analyzing their facial features. It works by detecting a face in an image or video and then comparing it to a database of known faces. Applications include security systems, user authentication, and social media tagging, allowing for enhanced identification and personalization.

Feature Extraction

The process of transforming raw data into a smaller set of informative features that improve the performance of machine learning models. In computer vision, feature extraction involves detecting key points, edges, or patterns in images that can be used for further analysis, classification, or recognition tasks. It simplifies data representation and reduces dimensionality while retaining essential information.

Generative Adversarial Network (GAN)

A type of deep learning model that consists of two competing networks: a generator and a discriminator. The generator creates new data samples, while the discriminator tries to distinguish them from real data. As the two networks compete, the generator learns to produce increasingly realistic output, allowing GANs to create high-quality images, audio, and other data. This makes them useful in applications like image synthesis, video generation, and style transfer.

Image Augmentation

Techniques used to artificially expand a dataset by creating modified versions of existing images. Common methods include rotating, flipping, scaling, and changing brightness or color. Image augmentation helps improve the robustness of machine learning models by providing more diverse training examples, reducing the risk of overfitting.
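Simple augmentations are just array transformations. The sketch below treats a grayscale image as a hypothetical list of rows of pixel values from 0 to 255 and shows a horizontal flip, a 90-degree clockwise rotation, and a brightness shift clipped to the valid range; real pipelines typically apply such transforms at random each time an image is loaded.

```python
def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate by reversing the row order and then transposing."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_brightness(image, delta, max_value=255):
    """Add delta to every pixel, clipping to the range [0, max_value]."""
    return [[min(max(p + delta, 0), max_value) for p in row] for row in image]


image = [[10, 20], [30, 250]]
print(horizontal_flip(image))        # [[20, 10], [250, 30]]
print(rotate_90_clockwise(image))    # [[30, 10], [250, 20]]
print(adjust_brightness(image, 10))  # [[20, 30], [40, 255]]
```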

Image Classification

The process of assigning a label or category to an image based on its content. A machine learning model is trained on a dataset with labeled images to recognize patterns and features. Once trained, the model can predict the category of new, unseen images, making it useful in applications like facial recognition and object categorization.

Image Processing

Manipulating and analyzing images to enhance their quality or extract useful information. Techniques in image processing can include filtering, resizing, noise reduction, and contrast adjustment. It serves as a foundation for various applications, including computer vision, medical imaging, and photography.

Image Segmentation

The process of dividing an image into meaningful regions or segments for easier analysis. Each segment corresponds to a specific object or area within the image. Image segmentation is crucial in applications like medical imaging, autonomous vehicles, and scene understanding, as it allows systems to identify and interact with individual objects.

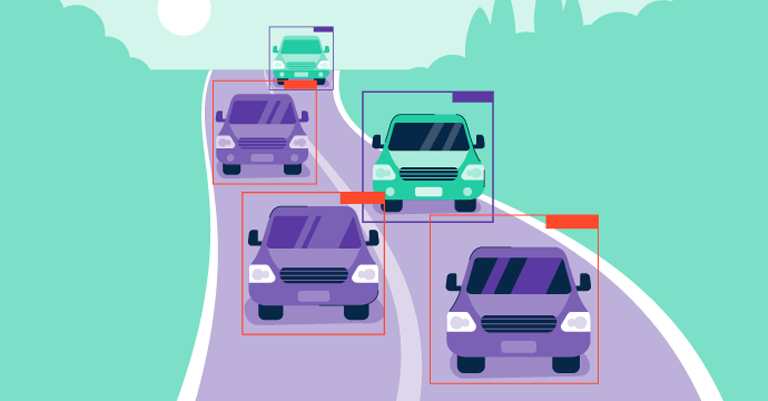

Object Detection

The task of identifying and locating objects within an image or video. It involves recognizing the objects and determining their positions using bounding boxes. Object detection is widely used in applications like surveillance, autonomous driving, and image analysis, enabling machines to understand complex scenes.

Optical Character Recognition (OCR)

A technology that converts different types of documents, such as scanned paper documents or images, into editable and searchable text. OCR analyzes the shapes of characters and uses machine learning algorithms to recognize letters and words. It is widely used for digitizing printed materials, automating data entry, and enhancing accessibility.

Pose Estimation

The process of determining the position and orientation of a person or object in an image or video. It involves identifying key points, such as joints or body parts, to create a representation of the pose. Pose estimation is used in applications like human-computer interaction, sports analysis, and animation.

Scene Understanding

The process of analyzing and interpreting the elements within an image or video to gain insights about the environment. It includes recognizing objects, their relationships, and the overall context of the scene. Scene understanding is essential for applications such as autonomous driving, robotics, and augmented reality, as it helps machines interact intelligently with their surroundings.

Video Analysis

The process of examining video content to extract meaningful information. It involves techniques like object tracking, activity recognition, and motion detection. Video analysis is used in various applications, including surveillance, sports analytics, and human behavior understanding, enabling systems to make sense of dynamic visual data.

Visual Recognition

The ability of a system to identify and classify objects or patterns in images or videos. It encompasses various tasks, such as image classification, object detection, and facial recognition. Visual recognition enables machines to interpret visual information, making it essential for applications in security, autonomous vehicles, and content management.

AI Ethics and Policy

The moral implications and regulatory aspects of artificial intelligence technology. It examines how AI impacts society, individuals, and organizations, focusing on issues like fairness, accountability, transparency, and privacy. AI ethics emphasizes the importance of developing AI systems that are unbiased and respect user rights. Policymakers work to create guidelines and regulations to ensure responsible AI development and use, promoting safety and ethical standards while balancing innovation with societal needs. This area is essential for building trust in AI technologies and ensuring they benefit everyone.

Accountability

The obligation of individuals and organizations to take responsibility for their actions and decisions, especially regarding AI systems. It ensures that there are clear processes and consequences for how AI technologies are developed and used. Accountability promotes trust and encourages ethical practices in AI development.

Algorithmic Bias

When an AI system produces results that are systematically unfair or prejudiced due to flawed assumptions in its algorithms or training data. Algorithmic bias can lead to discrimination against certain groups based on factors like race, gender, or socioeconomic status. Addressing this issue is crucial for developing fair and equitable AI systems.

Consent

The agreement of individuals to allow their data to be collected and used by AI systems. Consent ensures that users are informed about how their information will be utilized, promoting transparency and respect for personal privacy. Ethical practices require that consent be obtained freely and clearly, allowing individuals to make informed choices.

Data Privacy

The protection of personal information collected by organizations, especially in the context of AI and digital technologies. Data privacy ensures that individuals have control over their data and how it is used, preventing unauthorized access and misuse. Strong data privacy measures are essential for building trust and safeguarding user rights.

Ethical AI

The development and deployment of AI technologies in a manner that is fair, transparent, and respectful of human rights. Ethical AI aims to create systems that minimize harm, promote inclusivity, and enhance social good. It encourages developers and organizations to consider the broader implications of their AI systems and to prioritize ethical considerations throughout the AI lifecycle.

Explainability

The ability of AI systems to provide clear and understandable explanations for their decisions and actions. Explainability helps users comprehend how a model arrived at a particular outcome, making it easier to trust and validate its results. This is especially important in high-stakes applications like healthcare and finance, where understanding the reasoning behind decisions is crucial.

Fairness

The practice of ensuring that AI systems operate without bias and treat all individuals and groups equitably. Fairness aims to prevent discrimination based on characteristics such as race, gender, or socioeconomic status. Developing fair AI systems involves assessing and addressing biases in data and algorithms to promote just outcomes for all users.

Human Oversight

The involvement of human judgment in the operation and decision-making processes of AI systems. Human oversight ensures that there are checks and balances in place, allowing people to review, validate, and intervene in automated decisions when necessary. This practice enhances accountability and helps mitigate potential risks associated with AI.

Regulation

The establishment of rules and guidelines by governmental or authoritative bodies to govern the development and use of AI technologies. Regulation aims to protect users’ rights, ensure ethical practices, and promote safety in AI applications. Effective regulation balances innovation with accountability, helping to foster trust in AI systems.

Transparency

The act of providing clear information about how AI systems work, including their underlying processes, data sources, and decision-making criteria. Transparency helps users understand the capabilities and limitations of AI technologies, fostering trust and accountability. It is essential for ethical AI development and encourages responsible use of technology.

Deep Learning

A subset of machine learning that uses deep neural networks with many layers to analyze data and make decisions. Deep learning models are designed to automatically learn representations from raw data, allowing them to identify complex patterns and features. These models excel in tasks such as image and speech recognition, natural language processing, and game playing. By processing large amounts of data, deep learning enables significant advancements in AI applications, often achieving higher accuracy than traditional machine learning methods.

Activation Function

A mathematical function applied to the weighted sum of a neuron’s inputs to produce the neuron’s output. It controls how strongly the neuron “fires” and, crucially, introduces non-linearity into the model; without it, a stack of layers would behave like a single linear transformation. Common activation functions include ReLU (Rectified Linear Unit), sigmoid, and tanh. They are essential for helping neural networks learn complex patterns.
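The three activation functions named above can be written in a few lines each. The sketch below also shows a single hypothetical neuron that forms a weighted sum of its inputs plus a bias and passes it through the chosen activation; the weights and inputs are arbitrary illustrative values.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    # Written in two branches to avoid overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    return math.tanh(z)


def neuron(inputs, weights, bias, activation):
    """Weighted sum of inputs plus bias, passed through an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


for f in (relu, sigmoid, tanh):
    print(f.__name__, neuron([1.0, 2.0], [0.5, -1.0], 0.25, f))
```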

Autoencoder

A type of neural network designed to learn efficient representations of data by compressing it into a lower-dimensional space and then reconstructing it. An autoencoder consists of an encoder that reduces the data and a decoder that reconstructs the original input. It is often used for tasks like data compression, denoising, and feature extraction.

Backpropagation

An algorithm used to train neural networks by calculating the gradient of the loss function with respect to each weight in the network. It works by propagating the error backward through the layers, allowing the model to adjust its weights to minimize the loss. Backpropagation is essential for optimizing the performance of neural networks.
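The chain rule at the heart of backpropagation can be traced by hand on a tiny network with one input, one tanh hidden unit, and one linear output, trained with squared-error loss. The weights and data below are arbitrary illustrative values. The final lines compare the hand-derived gradient with a finite-difference estimate, a common way to sanity-check a backpropagation implementation.

```python
import math


def forward_backward(w1, w2, x, target):
    """Return the loss and its gradients with respect to w1 and w2."""
    # Forward pass: compute and keep the intermediate values.
    z = w1 * x
    h = math.tanh(z)
    y = w2 * h
    loss = 0.5 * (y - target) ** 2
    # Backward pass: apply the chain rule from the loss back toward the input.
    dloss_dy = y - target
    dloss_dw2 = dloss_dy * h
    dloss_dh = dloss_dy * w2
    dloss_dz = dloss_dh * (1.0 - h ** 2)  # d tanh(z)/dz = 1 - tanh(z)^2
    dloss_dw1 = dloss_dz * x
    return loss, dloss_dw1, dloss_dw2


loss, grad_w1, grad_w2 = forward_backward(w1=0.5, w2=-1.2, x=0.8, target=0.3)

# Sanity check: estimate d(loss)/d(w1) numerically with a central difference.
eps = 1e-6
numeric_w1 = (forward_backward(0.5 + eps, -1.2, 0.8, 0.3)[0]
              - forward_backward(0.5 - eps, -1.2, 0.8, 0.3)[0]) / (2 * eps)
print(grad_w1, numeric_w1)  # the two values should agree closely
```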

Batch Normalization

A technique used to improve the training speed and stability of deep learning models. For each mini-batch, it normalizes a layer’s inputs to have a mean of zero and a variance of one, then applies a learnable scale and shift. Originally motivated as a way to reduce “internal covariate shift,” batch normalization allows higher learning rates and typically leads to faster convergence during training.
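For a single feature, the training-time computation can be sketched as follows. This is a simplified illustration: `gamma` and `beta` stand in for the learnable scale and shift, `eps` guards against division by zero, and real implementations normalize every feature in parallel and switch to running averages of the mean and variance at inference time.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature's values across a mini-batch, then scale and shift."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


activations = [1.0, 2.0, 3.0, 4.0]
print(batch_norm(activations))  # roughly [-1.34, -0.45, 0.45, 1.34]
print(batch_norm(activations, gamma=2.0, beta=3.0))
```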

Convolution

A mathematical operation used in convolutional neural networks (CNNs) to extract features from input data, such as images. It involves applying a filter (or kernel) to the input to produce a feature map that highlights important patterns. Convolution helps the model learn spatial hierarchies and is fundamental for tasks like image recognition and processing.
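The sketch below slides a small kernel over a 2D grid of numbers and sums the element-wise products at each position, producing a feature map. It assumes stride 1 and no padding, and the image and kernel are hypothetical toy values. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes cross-correlation, which is what CNNs conventionally call convolution.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1 (no padding, kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A dark-to-bright vertical edge, and a kernel that responds to left-to-right increases.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
edge_kernel = [[-1, 1]]
print(conv2d(image, edge_kernel))  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The feature map is nonzero only in the column where brightness jumps, which is how a learned filter can come to act as an edge detector.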

Dropout

A regularization technique used in neural networks to prevent overfitting. During training, a certain percentage of neurons are randomly “dropped out” or ignored, meaning they do not contribute to the forward pass or backpropagation. This forces the network to learn more robust features by not relying on any specific neuron, improving its generalization to new data.
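Many frameworks implement the “inverted” variant sketched below: during training each activation is zeroed with probability `p`, and the survivors are scaled by 1/(1 − p) so the expected activation stays the same, which means nothing needs to change at inference time. The function is a plain-Python illustration; the seeded random generator only makes the example repeatable.

```python
import random


def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each value with probability p and rescale the rest."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # inference: pass values through unchanged
    keep_prob = 1.0 - p
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(42)
print(dropout([1.0] * 8, p=0.5, rng=rng))         # each entry is 0.0 or 2.0
print(dropout([1.0] * 8, p=0.5, training=False))  # unchanged
```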

Fine-tuning

The process of making small adjustments to a pre-trained model to improve its performance on a specific task. Fine-tuning typically involves training the model on a smaller, task-specific dataset while keeping the learned parameters mostly intact. This approach saves time and resources, as the model has already learned general features from a larger dataset.

Generative Models

Models that can create new data samples from learned distributions. They are trained to understand the underlying patterns in the training data and can generate new instances that resemble that data. Common examples include Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), used in applications like image generation and text synthesis.

Gradient Descent

An optimization algorithm used to minimize the loss function in machine learning models. It works by calculating the gradient (or slope) of the loss function with respect to the model parameters and adjusting the parameters in the opposite direction of the gradient. By iteratively updating the parameters, gradient descent moves toward values that minimize the loss, although for non-convex losses, such as those of deep neural networks, it may settle in a local rather than a global minimum.
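The update rule is w ← w − η · ∇L(w), where η is the learning rate. The sketch below applies it to a hypothetical one-parameter loss L(w) = (w − 3)², whose gradient is 2(w − 3) and whose minimum is at w = 3; the learning rate and step count are illustrative.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


def loss_grad(w):
    # Gradient of the toy loss L(w) = (w - 3) ** 2.
    return 2 * (w - 3)


print(gradient_descent(loss_grad, w0=0.0))  # very close to 3.0
```

With `learning_rate=0.1`, each step shrinks the distance to the minimum by a factor of 0.8. For this particular loss, a learning rate above 1.0 makes the updates overshoot by more each time and diverge, which illustrates why the learning rate is tuned carefully.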

Hyperparameter Tuning

The process of optimizing the settings or configurations of a machine learning model that are not learned from the data itself. Hyperparameters, such as learning rate, batch size, and number of layers, influence the training process and performance. Tuning these parameters involves testing different combinations to find the best configuration for the model, often using techniques like grid search or random search.
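Grid search simply tries every combination of candidate values. In the sketch below, `evaluate` is a hypothetical stand-in for training a model and returning a validation score (higher is better); here it is a made-up function that happens to peak at a learning rate of 0.01 and a depth of 4.

```python
from itertools import product


def grid_search(evaluate, grid):
    """Try every combination in `grid` and return the best parameters and score."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def evaluate(learning_rate, max_depth):
    # Hypothetical validation score; a real one would train and validate a model.
    return -abs(learning_rate - 0.01) - abs(max_depth - 4)


grid = {"learning_rate": [0.1, 0.01, 0.001], "max_depth": [2, 4, 8]}
best_params, best_score = grid_search(evaluate, grid)
print(best_params)  # {'learning_rate': 0.01, 'max_depth': 4}
```

The number of combinations grows multiplicatively with each hyperparameter (3 × 3 = 9 runs here), which is why random search, which samples a fixed number of combinations, is often preferred for larger search spaces.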

Long Short-Term Memory (LSTM)

A type of recurrent neural network (RNN) designed to learn from sequences of data while addressing the issue of vanishing gradients. LSTMs have special units called memory cells that can maintain information over long periods, making them effective for tasks like speech recognition, language modeling, and time series prediction. They can remember important information and forget irrelevant data, enabling better handling of sequential dependencies.

Multilayer Perceptron (MLP)

A type of feedforward neural network consisting of multiple layers of nodes, including an input layer, one or more hidden layers, and an output layer. Each node in one layer is connected to every node in the next layer, allowing the MLP to learn complex patterns in data. MLPs are commonly used for tasks such as classification and regression.

Neural Network

A computational model inspired by the structure of the human brain, consisting of interconnected nodes (neurons) organized into layers. Neural networks process input data through these layers, applying weights and activation functions to learn and make predictions. They are fundamental to many machine learning applications, including image recognition, natural language processing, and more.

Pooling Layer

A layer in a convolutional neural network (CNN) that reduces the spatial dimensions of the input feature map. Pooling layers help summarize the information by downsampling, typically using operations like max pooling or average pooling. This process decreases the computational load and helps make the model more invariant to small translations in the input.
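A 2×2 max pooling layer with stride 2, a common configuration, keeps only the largest value in each non-overlapping 2×2 window, halving the height and width. The plain-Python sketch below assumes a single-channel feature map stored as a list of rows; the values are hypothetical.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Take the maximum of each size x size window, moving by `stride`."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + a][j * stride + b]
                for a in range(size)
                for b in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 3, 2],
    [2, 6, 1, 1],
]
print(max_pool2d(feature_map))  # [[4, 5], [6, 3]]
```

Average pooling works the same way but replaces `max` with the mean of each window.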

Pre-trained Model

A machine learning model that has already been trained on a large dataset before being used for a specific task. Pre-trained models capture general features and knowledge from the training data, allowing users to fine-tune them for new tasks with smaller datasets. This approach saves time and resources while often improving performance in tasks like image classification and natural language processing.

Recurrent Neural Network (RNN)

A type of neural network designed for processing sequences of data by using loops to allow information to persist. Unlike traditional feedforward networks, RNNs maintain a hidden state that captures information from previous inputs, making them suitable for tasks like time series prediction, natural language processing, and speech recognition. However, RNNs can struggle with long-term dependencies due to issues like vanishing gradients.

Regularization

A technique used in machine learning to prevent overfitting by adding a penalty on model complexity to the loss function. Common methods include L2 regularization (used in Ridge regression), which penalizes large weights and keeps them small, and L1 regularization (used in Lasso regression), which can drive some weights exactly to zero. By discouraging overly complex models, regularization improves the model’s ability to generalize to new, unseen data.
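The penalty is simply added to the ordinary loss. The sketch below assumes a linear model without a bias term, mean squared error, and a hypothetical regularization strength `lam`. Conventions differ between libraries (some scale the L2 term by one half or by the number of samples), so treat this as an illustration of the idea rather than any specific library’s formula.

```python
def mean_squared_error(weights, xs, ys):
    """MSE of a linear model without a bias term."""
    preds = [sum(w * x for w, x in zip(weights, row)) for row in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def regularized_loss(weights, xs, ys, lam, kind="l2"):
    """Data loss plus lam times an L1 or L2 penalty on the weights."""
    if kind == "l2":
        penalty = sum(w * w for w in weights)   # sum of squared weights
    elif kind == "l1":
        penalty = sum(abs(w) for w in weights)  # sum of absolute weights
    else:
        raise ValueError("kind must be 'l1' or 'l2'")
    return mean_squared_error(weights, xs, ys) + lam * penalty


weights = [1.0, -2.0]
xs = [[1.0, 0.0], [0.0, 1.0]]
ys = [1.0, -2.0]  # the weights fit these points exactly, so the data loss is 0
print(regularized_loss(weights, xs, ys, lam=0.1, kind="l2"))  # 0.5
print(regularized_loss(weights, xs, ys, lam=0.1, kind="l1"))
```

Even a perfect fit carries a cost here, so during training the optimizer is pushed toward solutions that fit the data with smaller (L2) or fewer nonzero (L1) weights.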

Self-Supervised Learning

A type of machine learning where the model learns to predict parts of the input data from other parts without needing labeled data. In self-supervised learning, the model generates its own supervisory signals by creating tasks from the data itself, such as predicting the next word in a sentence or filling in missing parts of an image. This approach helps the model learn useful representations and features from large datasets without extensive labeling.

Transfer Learning

A technique that involves taking a pre-trained model developed for one task and fine-tuning it for a different but related task. By leveraging the knowledge gained from the original task, transfer learning allows the model to adapt to new challenges with less data and training time. It is particularly effective in fields like computer vision and natural language processing, where pre-trained models can be adapted to specific applications.